If you're looking to swap your old iPhone for a spanking new model like the iPhone 14, Apple's official Trade In program is one of the simplest ways to do it. But thanks to some recent downgrades to its trade-in values, it's also now one of the worst-value trade-in services out there.

As spotted by MacRumors, some models of iPhone are now worth significantly less on trade-ins than they were last week. The worst hit, the iPhone 13 Pro series, saw its trade-in price drop by $80 in the US, and Apple is offering similar prices in the UK. The iPhone 13's trade-in value also went down by $50.

While Apple hasn't cut its valuations for every iPhone, its full list of trade-in prices underlines that there is a cost to the convenience of offloading your old phone directly through the manufacturer. Apple's trade-ins aren't necessarily recycled – it says that if your phone is "in good shape", it'll "help it go to a new owner". And the price of doing that is factored into the prices it offers for old iPhones.

So what exactly are your alternatives? While the best choice depends on which iPhone you're looking to get, the table below shows there are some financial benefits in shopping around for trade-in offers.

The good news is that, compared to Android phones, iPhones generally hold their value better – a US trade-in comparison site said that a used iPhone lost 68.8% of its value last year, compared to 84.2% for Samsung and 89.5% for Google.
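To put those percentages into money terms, here is a minimal sketch that turns each depreciation figure into a leftover resale value. The $1,000 purchase price is a hypothetical number chosen purely for illustration (it isn't from the comparison site), and `retained_value` is just a helper name made up for this example.

```python
# Share of value lost over the year, as quoted in the paragraph above.
DEPRECIATION = {"iPhone": 0.688, "Samsung": 0.842, "Google": 0.895}


def retained_value(purchase_price: float, depreciation: float) -> float:
    """Return what is left of purchase_price after a fractional loss in value."""
    return round(purchase_price * (1 - depreciation), 2)


# Hypothetical purchase price, used only to make the comparison concrete.
PURCHASE_PRICE = 1000.0

for brand, rate in DEPRECIATION.items():
    print(f"{brand}: ${retained_value(PURCHASE_PRICE, rate):.2f}")
```

On that assumed price, the iPhone would still be worth roughly $312, against about $158 for the Samsung and $105 for the Google phone.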

If you have a particularly old iPhone that's no longer working properly, you may still prefer to trade it in through Apple safe in the knowledge that it'll go to one of the manufacturer's approved recycling partners. But if you're looking to get a solid discount off your next phone, or perhaps even a free Apple Watch, these are the places to consider.

1. Check your phone carrier

If you bought your current iPhone through a network operator and are looking to stay with them, there are some impressive trade-in offers available that may well trump going through a reseller or selling privately.

These typically give you account credit or a promotion card to put towards a new phone, or offer free recycling. Here are some links to the major schemes in the US and UK.

US network trade-in schemes

UK network phone trade-in schemes

Not everyone will be on a pricey unlimited plan and be looking to buy a brand-new iPhone 14, but if you are, there are some impressive trade-in deals like the one below.

Impressively, Verizon is offering a free Apple Watch 7 and an extra $200 off an iPad when you trade an iPhone in, on top of the usual rebate. For more deals like that one, check out our guide to the best iPhone deals.

Apple iPhone 14: up to $800 off with trade, plus free Apple Watch at Verizon

Verizon's iPhone deals offer the usual trade-in rebate of up to $800 off on the iPhone 14 this week – a great promotion, but nothing too special. What's really sweetening the deal this week is that the carrier is also offering a free Apple Watch 7 and an additional $200 off an iPad as a bonus promotion to the trade-in, which is absolutely awesome value. While you'll still need that pricey unlimited plan to take part here, grabbing some freebies on top of the usual device saving is a great option.

2. Use trade-in comparison sites

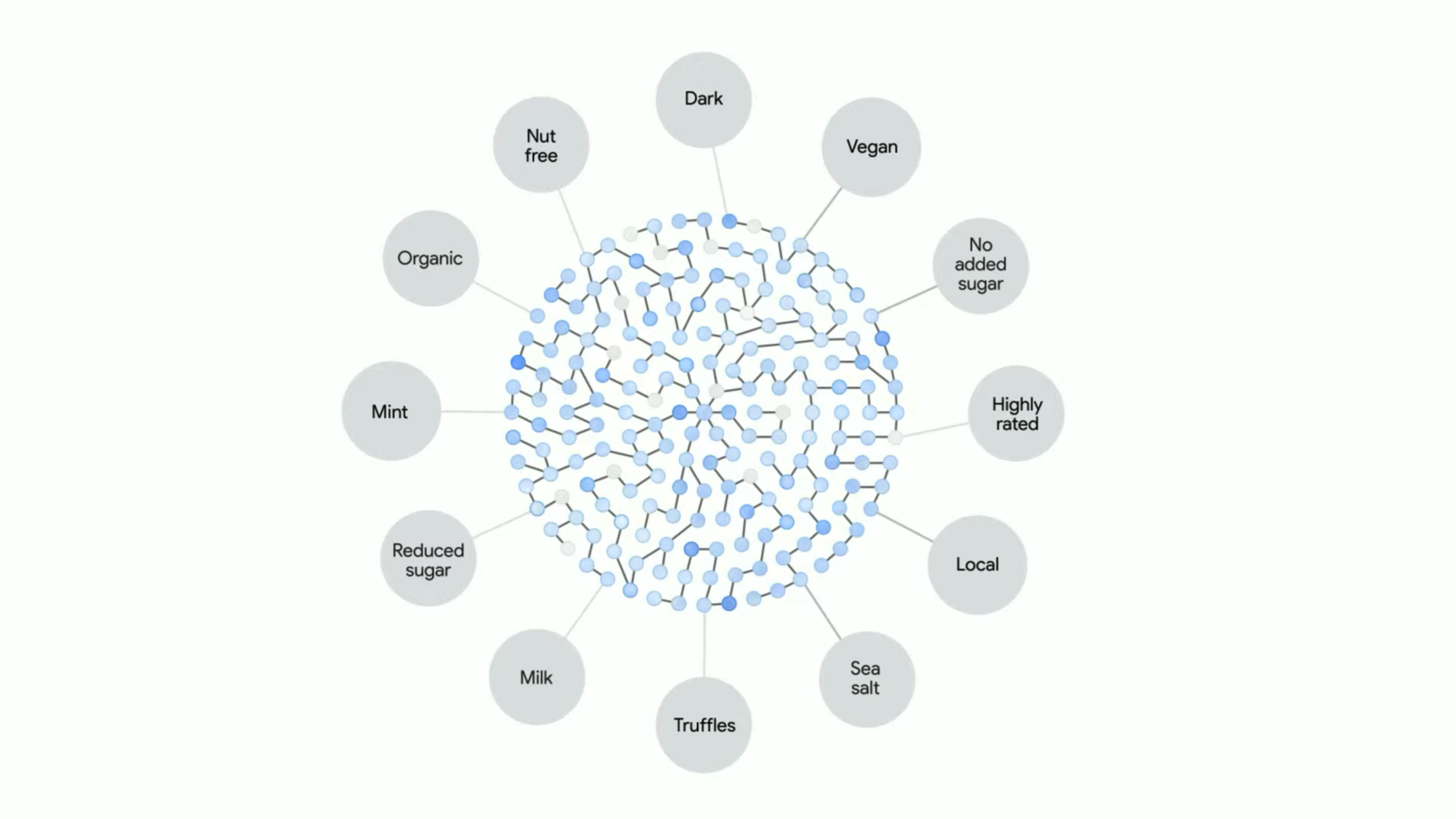

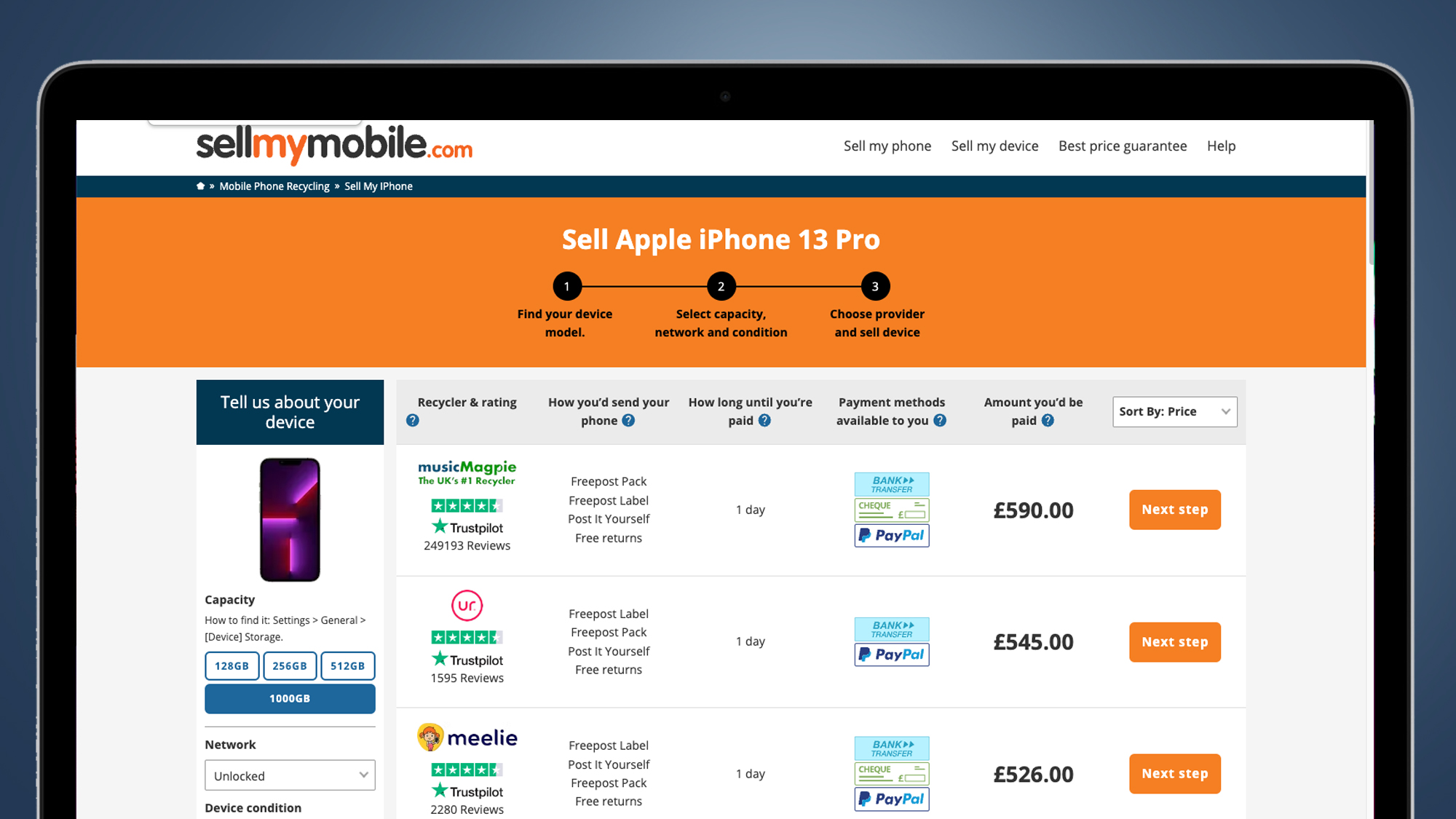

If you don't have time to trawl through every mobile reseller, trade-in comparison sites can give you a quick temperature check on what your iPhone could fetch at the many reselling rivals to Apple's program.

In the US, Flipsy is a handy place to see how much your phone is worth and offers free shipping, while in the UK some of the best options are Sell My Mobile and Compare and Recycle.

You might be able to find a better price for your iPhone by going directly to the resellers rather than via a comparison site, but they're a good way to quickly check how much more your iPhone might be able to fetch elsewhere.

3. Go direct to trade-in and recycling services

Another bonus of being an iPhone owner who's looking for a trade-in deal is that most of the major third-party sites have a strong focus on Apple, alongside Samsung. So where should you get your online quotes?

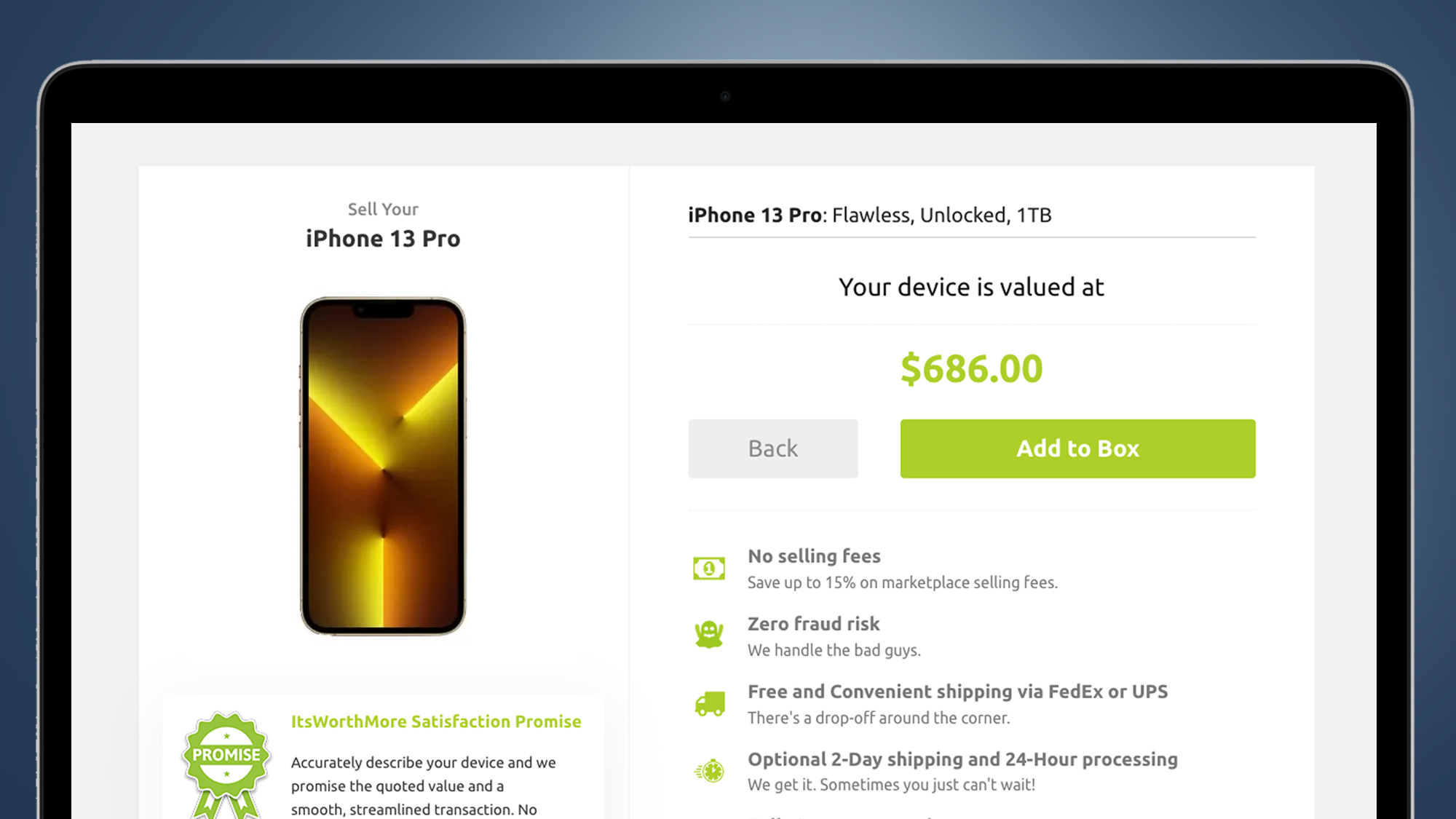

In the US, ItsWorthMore is a reliable and long-standing place to sell your iPhone (along with tablets and laptops). EcoATM (formerly known as Gazelle) is also a simple option, while USell will happily quote you for a broken or damaged iPhone that may not qualify for other trade-in programs.

If you're in the UK, Carphone Warehouse offers competitive trade-in prices (as you can see in the table above), while CeX will give you valuations for credit in its physical stores.

For recycling options, check out EcoATM and Best Buy (in the US) or Fonebank (in the UK). Some networks in the UK have also set up schemes for you to donate your old iPhone to someone in need – see Three's Reconnected and Vodafone's Great British Tech Appeal for good examples of those.

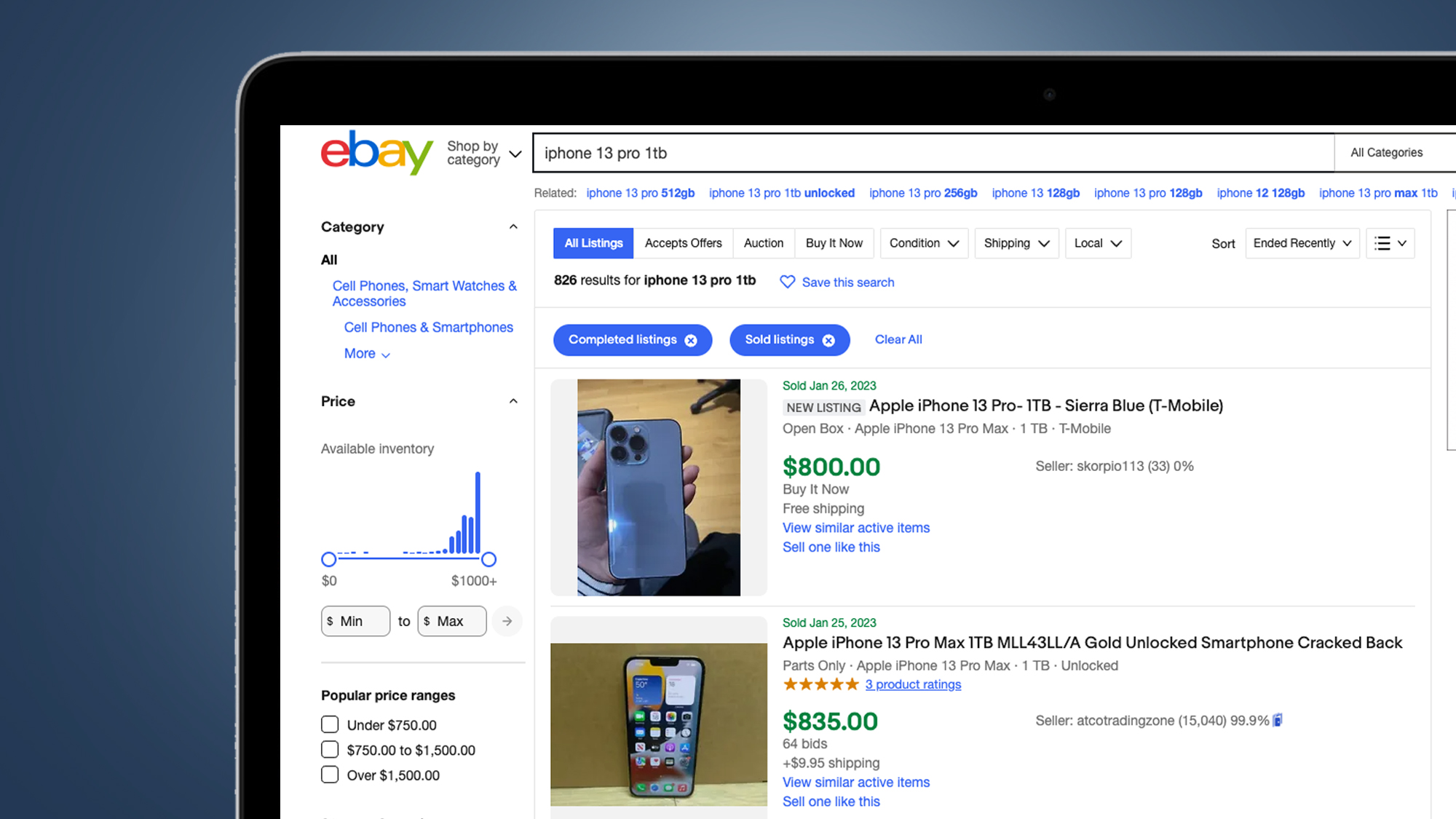

4. Sell privately

Naturally, selling an old iPhone yourself brings the highest potential price, but also the most hassle. Avoiding the latter is a big part of the appeal of trade-in services like the ones above.

Still, if you're happy to field dozens of potential questions from interested buyers in order to squeeze the most value from that phone, selling privately remains a profitable alternative to Apple's Trade In program.

The two big guns remain eBay and Facebook Marketplace, due to their vast audiences and secure payment setups. When should you sell? While it can leave you with a tricky period between having your old phone and getting a new one, the best time to flog an old iPhone is typically August (which is at least a month before Apple usually announces new models), and the worst is late September.

Whichever way you decide to go, make sure you read our excellent tips on how to sell or trade in your old smartphone, which we gathered after an insightful chat with the experts at Back Market.