We first caught a glimpse of Minecraft RTX way back at Gamescom 2019, where Nvidia was keen to show off how far ray tracing had come in the year since Nvidia Turing first hit the market. It's been close to a year since that first showing, though, and we're only just now seeing it enter beta.

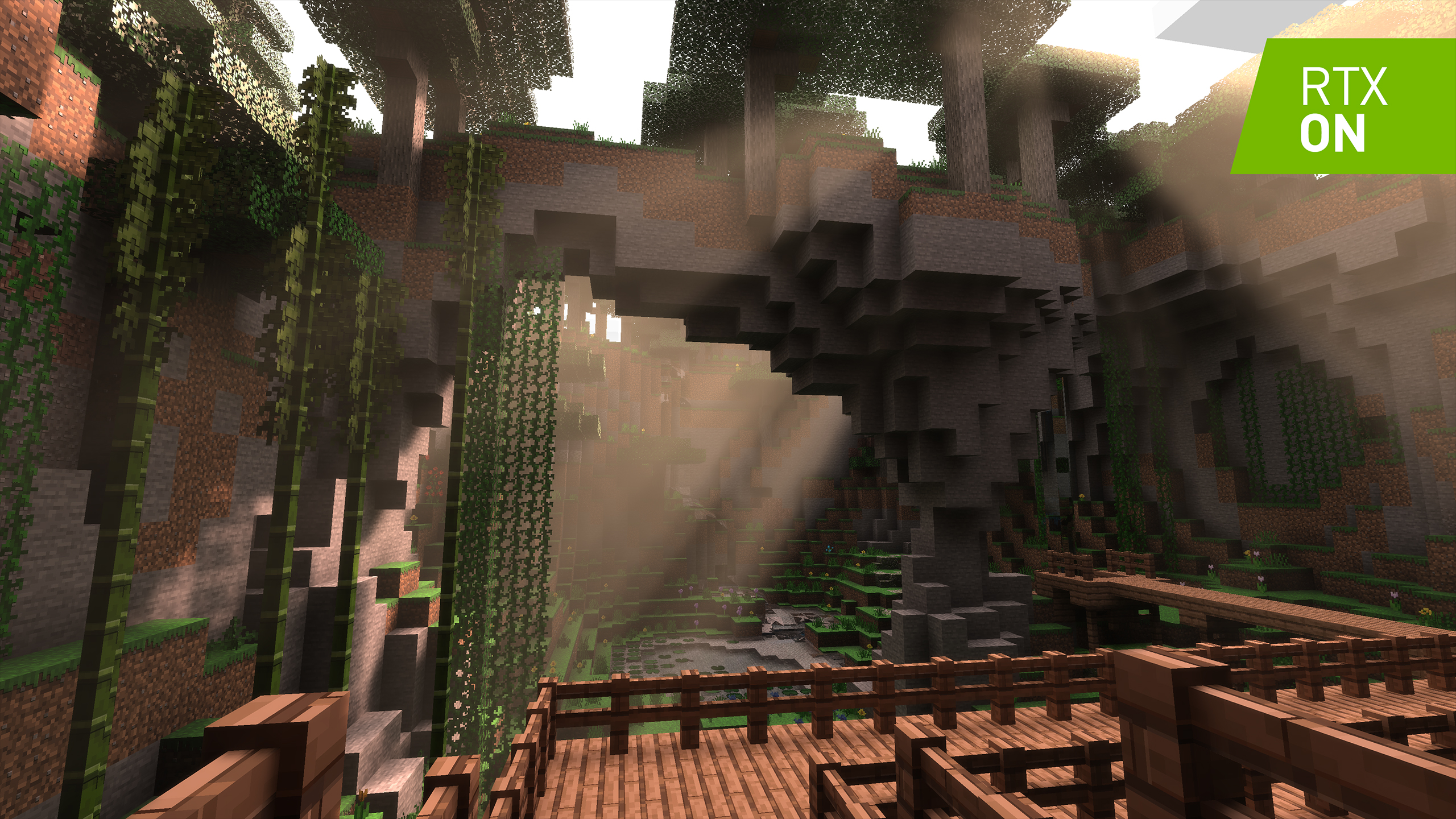

We've been playing the beta for a couple of days now, and based on our first impressions we can easily say that it, alongside Quake II RTX, makes for a stunning example of how much of a difference ray tracing can make.

And, sure, it can be argued that ray tracing in a game as visually basic as Minecraft is a waste of effort, but it's that basic nature that really makes the difference in lighting pop. Ray tracing, in effect, makes Minecraft look like an entirely different game, and we've got the screenshots to prove it.

When can I play Minecraft RTX?

First things first, if you're looking to get in on the Minecraft RTX action yourself, you're in luck. The Minecraft RTX beta begins today, and anyone can sign up for it – but it's a bit of a process.

Quick note: if you plan to do any of this, be sure to back up your worlds by going to the following folder and copying any files you need to keep.

- %LOCALAPPDATA%\Packages\Microsoft.MinecraftUWP_8wekyb3d8bbwe\LocalState\games\com.mojang\minecraftWorlds
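If you'd rather not copy the folder by hand, the backup can be scripted. What follows is a minimal Python sketch, not an official tool: it assumes the worlds folder sits at the location named above (with the path separators placed as shown in the code), and the `backup_worlds` helper and the `MinecraftBackups` destination folder are our own hypothetical names.

```python
import os
import shutil
import time
from pathlib import Path

# Pieces of the worlds folder named above, relative to %LOCALAPPDATA%.
# Assumption: the separators fall between these components.
WORLDS_SUBPATH = Path(
    "Packages",
    "Microsoft.MinecraftUWP_8wekyb3d8bbwe",
    "LocalState",
    "games",
    "com.mojang",
    "minecraftWorlds",
)


def default_worlds_dir() -> Path:
    """Build the worlds folder path from the LOCALAPPDATA environment variable."""
    return Path(os.environ["LOCALAPPDATA"]) / WORLDS_SUBPATH


def backup_worlds(worlds_dir: Path, backup_root: Path) -> Path:
    """Copy every world into a new timestamped folder and return that folder."""
    if not worlds_dir.is_dir():
        raise FileNotFoundError(f"No worlds folder at {worlds_dir}")
    target = backup_root / f"minecraftWorlds-{time.strftime('%Y%m%d-%H%M%S')}"
    shutil.copytree(worlds_dir, target)  # raises if the target already exists
    return target


if __name__ == "__main__":
    # Hypothetical destination; change it to wherever you keep backups.
    destination = Path.home() / "MinecraftBackups"
    print("Worlds copied to", backup_worlds(default_worlds_dir(), destination))
```

Each run writes to a fresh timestamped folder, so an older backup is never overwritten. Close Minecraft before running it so no world files are mid-save.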

Minecraft RTX is only available on Windows 10, so you're going to need to own that version to access it. To get into the beta, download the Xbox Insider Hub app from the Windows 10 Store, open it and click the three horizontal lines in the top left corner.

From there, click on 'Insider Content', then on Minecraft for Windows 10, and then click Join. Once you've joined, click on 'Manage' and choose whether you want to be part of the regular Minecraft Beta program or just the Minecraft RTX Beta.

Now that that's done, be sure to download the Game Ready driver from GeForce Experience or Nvidia's website.

What do you need to play Minecraft RTX?

Because we're talking about ray tracing, and AMD RDNA 2 GPUs with hardware-enabled ray tracing are releasing who-knows-when, you need an Nvidia RTX graphics card to really take advantage of these new effects.

Nvidia told us that an Nvidia GeForce RTX 2060 is required for "playable" framerates at 1080p, which it defined as "30 fps". Keep in mind, however, that this is with DLSS 2.0 enabled, which, to the uninitiated, is Nvidia's AI-based upscaling technology.

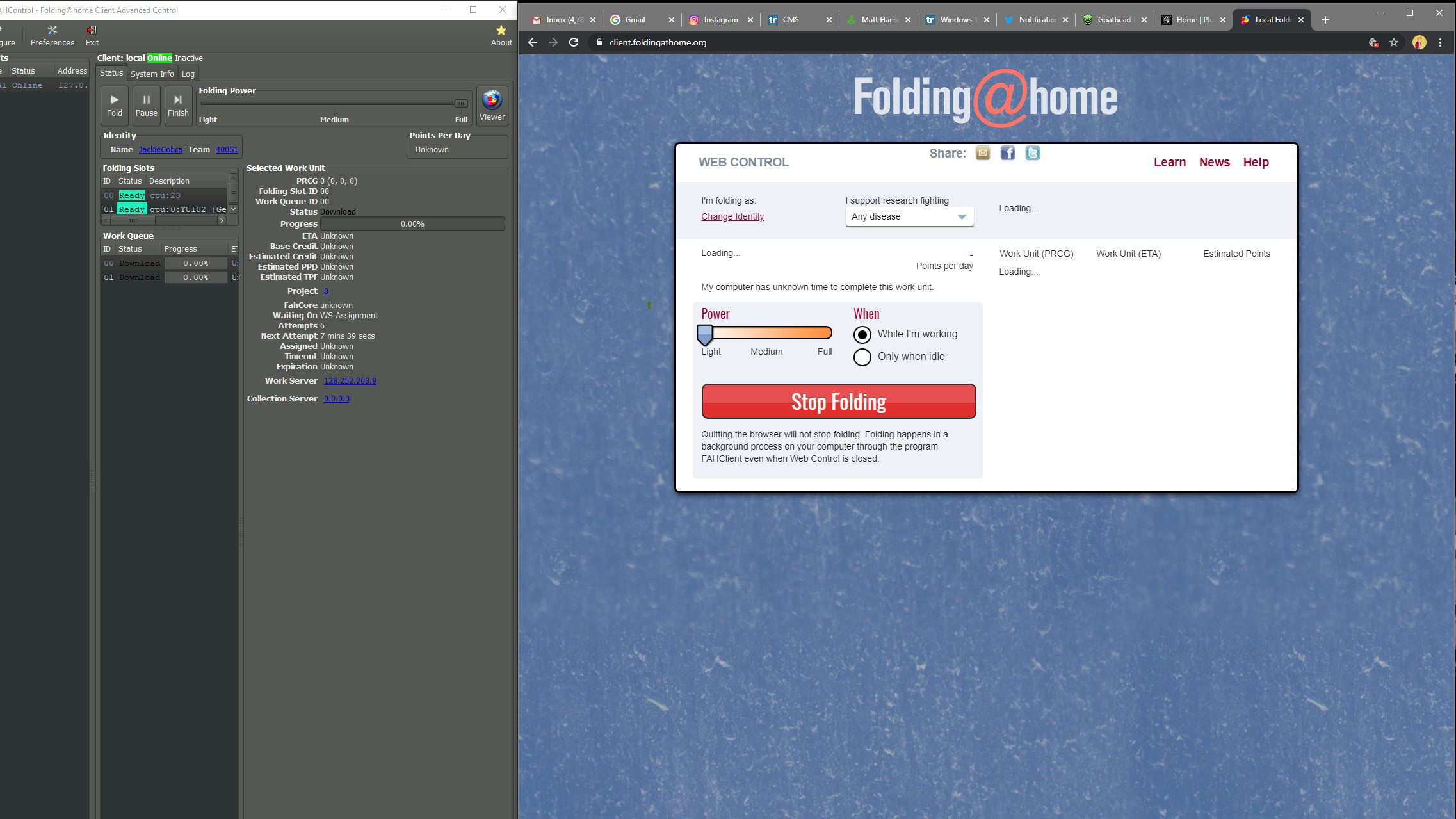

We didn't do full performance testing of Minecraft RTX like we typically do with major game launches (see Resident Evil 3 and Doom Eternal performance), because it's a beta. However, that doesn't mean that we ignored performance.

We played the game on our home gaming PC, which is packed with an AMD Ryzen 9 3900X, 32GB of RAM and an Nvidia GeForce RTX 2080 Ti – as close to top-end as you can get right now without breaking into the HEDT world. And, even with this extravagant level of hardware, we didn't manage to break a 60 fps average at 3,440 x 1,440 with ray tracing enabled.

And that was with DLSS turned on. When we turned off the upscaling, framerates tanked all the way down to the mid-20s, so we would say that enabling DLSS is basically required with Minecraft RTX.

Keep in mind that this is just a beta, however, so performance can get a lot better over time as the talented developers over at Mojang work out the kinks and get optimization down.

Until then, however, we'd advise sticking with 1080p or 1440p unless you're one of the few folks who have an RTX 2080 Ti installed.

Does Minecraft RTX really make that much of a difference?

We definitely encourage you to download and install the beta if you really want to see how much of a difference ray tracing makes with Minecraft, but we can personally attest that it makes it look like an entirely different game.

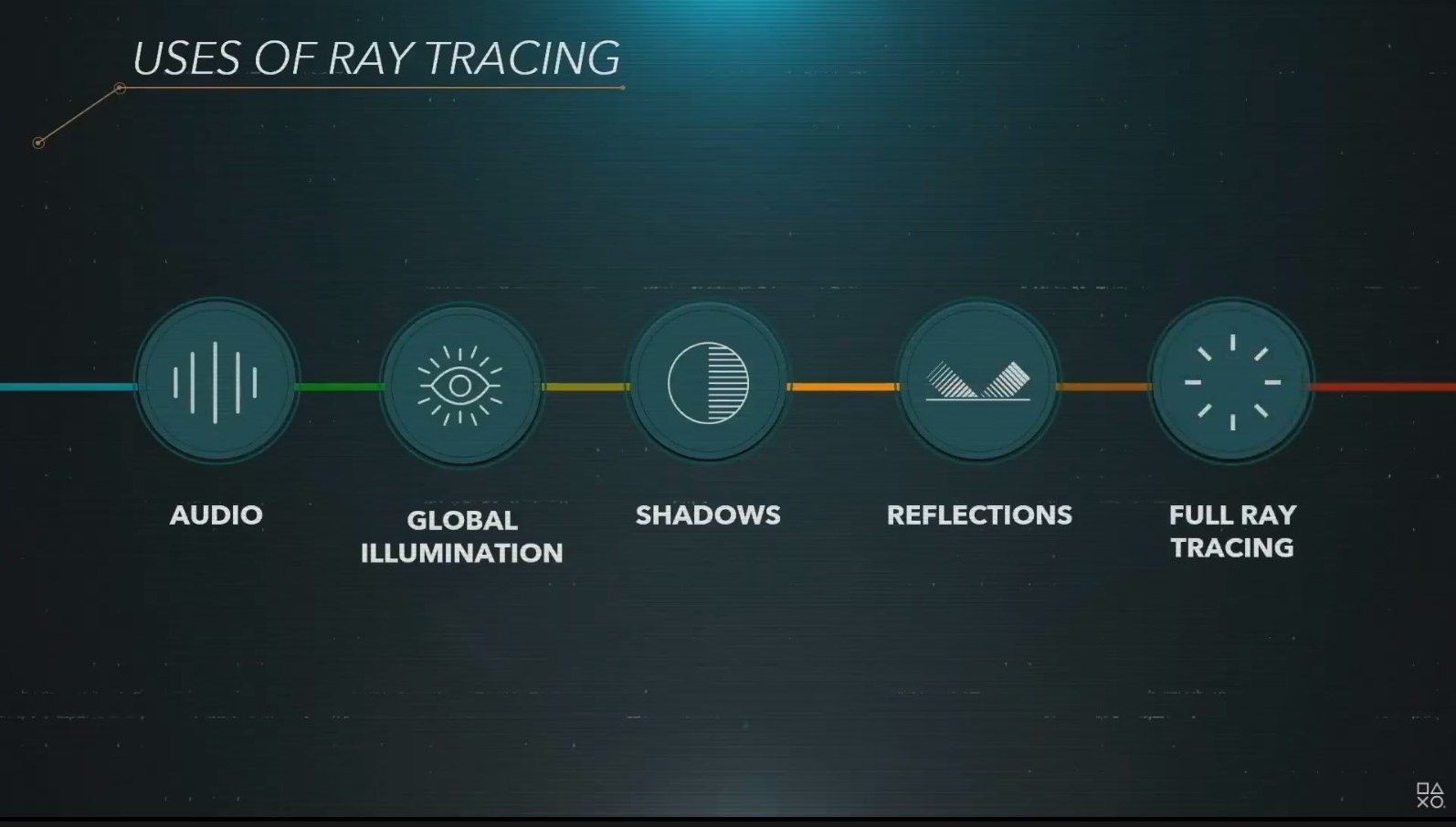

The inclusion of ray traced global illumination, shadows and reflections here makes every single object in the game look so much better. Items that create light are more important, as their placement in your project is going to determine whether or not a cave or a room is going to have enough light to see.

One of the demo worlds that Nvidia provided to us had this giant castle, and just for laughs we went through and removed all of the torches. It got so dark that we were reminded of the illumination tech found in Metro Exodus, which had really sold us on this whole ray tracing thing when we saw it in action.

We could go on all day about how jaw-dropping the difference is, but screenshots are going to tell the story better anyway, so here you go. And, again, we encourage you to try it out for yourself, because this editor had never played Minecraft before writing this article, and they don't really know how to get the most out of this technology here.

So, yeah: Minecraft with RTX is pretty incredible. While the game isn't as bombastic as Control or Metro Exodus, we think it's a neat way to show off what the tech can do.

Plus, in a game that revolves so much around creating whatever folks want to create, having more tools to do that can't hurt. Frankly, while we probably won't be playing much Minecraft in the future, we're ecstatic to see what people create with it.