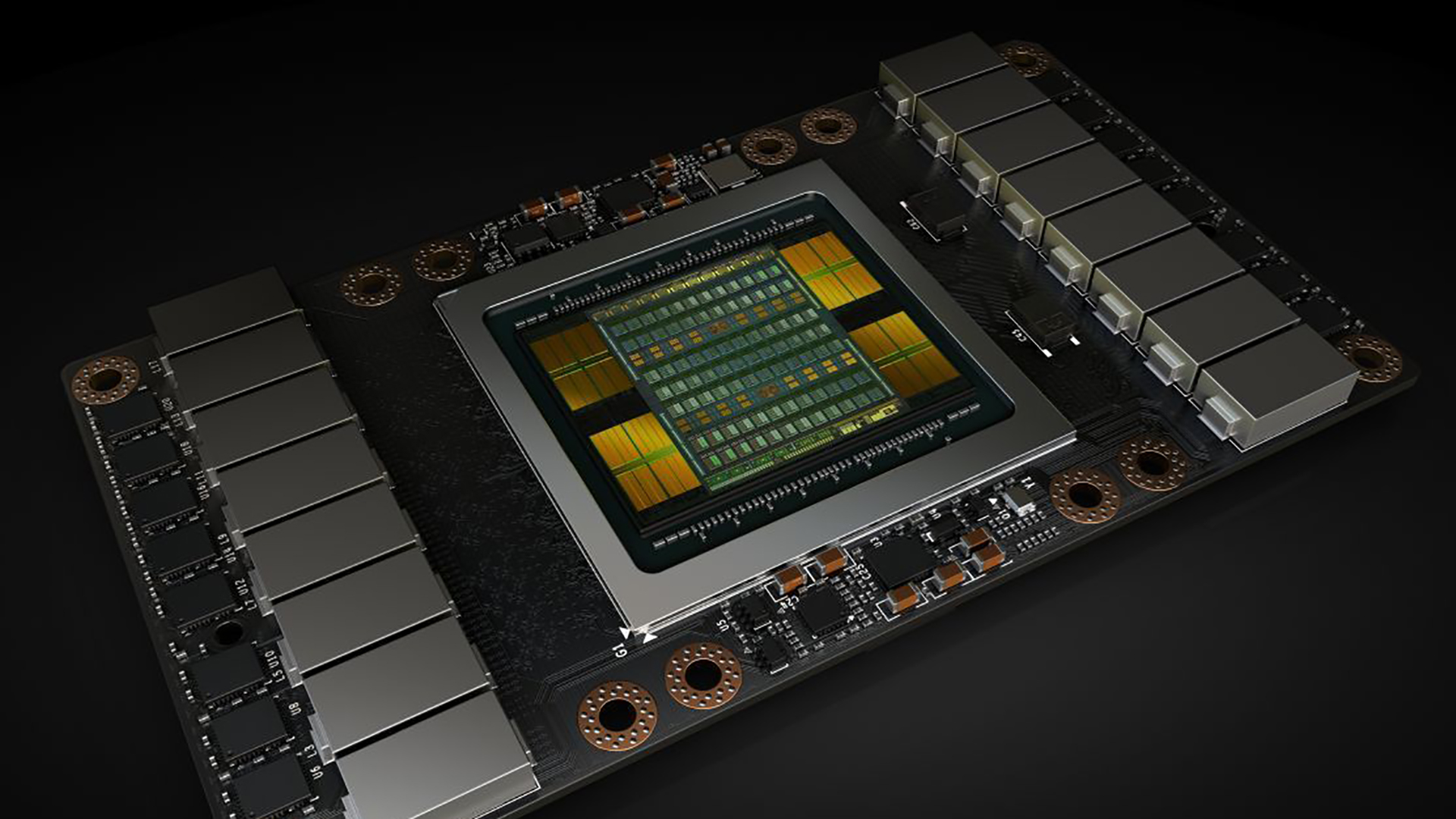

Recently, rumors surrounding the next Nvidia graphics cards have been everywhere, and it's extremely likely that we're going to see new ones before the year draws to a close. However, while some of the rumors surrounding the Nvidia GeForce RTX 3080 and other potential graphics cards like it seem extremely solid, others skew to the ridiculous side of the spectrum.

There is one Nvidia Ampere rumor that keeps resurfacing and has caught my attention, however: a supposed Nvidia GeForce RTX 3090. But here's the thing: it's probably not going to happen.

It'd be easy to just point at the Nvidia Turing lineup and say "hey, there was no RTX 2090, so there won't be an RTX 3090", but it runs a bit deeper than that. So, let's do a nice bit of deconstruction.

Back to the 90s

The last Nvidia graphics card we got with the xx90 name was the Nvidia GeForce GTX 690, all the way back in 2012. The 690, just like the GTX 590 before it, was a dual-GPU card, which meant users could run a sick SLI configuration that took up only one PCIe slot.

Nvidia wasn't alone in releasing graphics cards like this back then, as AMD was in on the action too. The AMD Radeon HD 7990, for instance, came out in 2013 and was probably the last dual-GPU card that could be considered mainstream, with later products like the R9 295X2 being far too expensive for everyday users to even consider.

It's not a coincidence that Nvidia dropped the xx90 naming scheme after the 690, either. The 690 wasn't Nvidia's last dual-GPU card (that honor goes to the Nvidia Titan Z), but the Titan Z, along with the rest of the Titan lineup, was meant for creative workloads rather than gaming.

Some may point to the AMD Radeon RX 590, of course, as that GPU launched only in late 2018. But that card was essentially just an AMD Radeon RX 580 moved to a 12nm process and clocked higher.

If you want to know more about the history of dual-GPU graphics cards, Tech Showdown has a video that goes into pretty exhaustive detail, going all the way back to the Voodoo II.

That single GPU life

Since the AMD Radeon HD 7990 and Nvidia GeForce GTX 690, any dual-GPU cards that did come out have sat apart from either manufacturer's main graphics card lineup, and there's a good reason for that.

I reached out to Nvidia and it told me that the question ultimately boils down to diminishing returns. I was told that "dual GPUs are expensive to produce and require adequate cooling and thermal/acoustics. There’s also a limited market for them."

The best graphics cards are already getting more and more expensive; just look at the Nvidia GeForce RTX 2080 Ti. That graphics card launched at $1,199 (£1,099, AU$1,899), with a whopping 250W TDP. Nvidia even had to adopt a dual-fan design for its Founders Edition cards this generation, and in our testing, temperatures still got super-hot.

But it's a bit more complicated than "it's expensive and hot". Games are built differently than they were a decade ago, and with the advent of DirectX 12, the onus is on developers to bake in support for multi-GPU configurations.

When I asked Nvidia about this, I was told "SLI was a huge hit for us when we launched it 16 years ago. At that time however, there were no APIs to support game scaling, so NVIDIA did all of the heavy lifting in our software drivers." SLI was successful enough that "folks were eager to pair two GPUs in their system using the SLI bridge. A dual GPU like the 690, is essentially the same thing, just on a single PCB (board)."

Software support has since shifted, as graphics APIs moved from implicit multi-GPU (mGPU) support to explicit mGPU support. With implicit mGPU, multi-GPU support came down to Nvidia: it would update its graphics drivers with profiles for different games, making sure that SLI configurations worked well. With explicit mGPU, that responsibility shifts to game developers, who have to build support straight into the game engine, while Nvidia still has to do a lot of heavy lifting on its end, too.

So, as Nvidia puts it: "explicit mGPU is rarely worth the time for the developer since the scaling result isn’t worth the investment. Plus, as our GPUs have become more powerful, and capable of doing 4K over 60fps as an example, there’s really no compelling reason to build a card with two GPUs."

It's impossible to rule out a future dual-GPU card entirely, but it doesn't seem very likely that we'll see one in the near future, at least not until 8K gaming becomes a thing, which is probably a long way off.

Does it have to be dual GPU?

Now that we're back in the world of speculation, it's possible that Nvidia will just put out a single-GPU card with xx90 branding and call it a day. I don't think that's going to happen either.

One of my colleagues pointed to the Intel Core i9-9900K and AMD Ryzen 9 3900X as examples, suggesting they could signal a shift across the components industry toward using the number "9" as branding. But I think there's some important context missing here.

Now, this is entirely my interpretation of the Coffee Lake Refresh release, but the Core i9-9900K appeared after second-generation AMD Ryzen processors like the Ryzen 7 2700X started threatening Intel's long-standing desktop superiority.

The Intel Core i9 branding was around before that, but only on high-end desktop (HEDT) processors like the Intel Core i9-7980XE, and there were no 8th-generation X-series chips... oops. However, when faced with AMD's rising competition and a manufacturing process that was, and still is, stagnant, Intel had to bring in the big guns with the Core i9-9900K.

And, in turn, AMD responded with the AMD Ryzen 9 3900X and the Ryzen 9 3950X, both of which remain unchallenged by Intel nearly a full year after Ryzen 3000's initial launch.

While the Core i9 and Ryzen 9 branding is probably here to stay, the graphics card market hasn't seen that kind of competitive shake-up yet. AMD RDNA 2 is somewhere off on the horizon, promising to bring ray tracing and 4K gaming to AMD Radeon users, but it's not here yet. Even if both AMD and Nvidia launch new graphics cards this year, Nvidia doesn't really have much of a reason to shake up its branding.

The biggest rumor pointing to the RTX 3090 revolves around the idea that there will be three graphics cards using the top-end GA102 GPU, but the Nvidia Titan line exists, too. If I had to guess what those three graphics cards would be, I'd suggest an RTX 3080 Ti and, if anything, two Titan cards.

Nvidia has been going all-in on creators over the last couple of years, especially with its RTX Studio program, so my money is still on one halo flagship gaming card, with any other GA102 GPUs (if that's even what it's called) reserved for pro-level Nvidia Titan graphics cards.

Still, this is all speculation. So, if this is wrong, Nvidia – prove it.
